Documentation

Microsoft Dynamics 365 On-Premises

For a general introduction to the connector, please refer to RheinInsights Microsoft Dynamics 365 Connector. This connector supports Microsoft Dynamics 365 Server Version 8.x and above.

Dynamics 365 On-Premises Configuration

Our Microsoft Dynamics 365 Connector supports user based authentication against Dynamics and uses the REST APIs provided by your instance.

Therefore, it uses a crawl user for accessing the data. Authentication can take place via NTLM or Kerberos. We recommend that the user’s password does not expire.

Permissions

The crawl user needs to have the following read permissions.

System users

Teams

Team members

Business units

Roles

Role collections

Role privileges

Team roles

User roles

Accounts

Addresses

Annotations

Phone calls

Posts

App modules

Contacts

Contracts

Incidents

KB articles

Knowledge articles

Leads

Opportunities

Sales orders

Active Directory

Due to the nature of Dynamics 365 on-premises user ids, being sAMAccountNames, it might be needed to map these to userprincipalnames, i.e., mail addresses. Therefore, a separate user must be used for user name mapping leveraging Active Directory. This user must have read access to the users in the global catalog of your Active Directory.

Please refer to Ldap/Active Directory Security Transformer for the according configuration instructions.

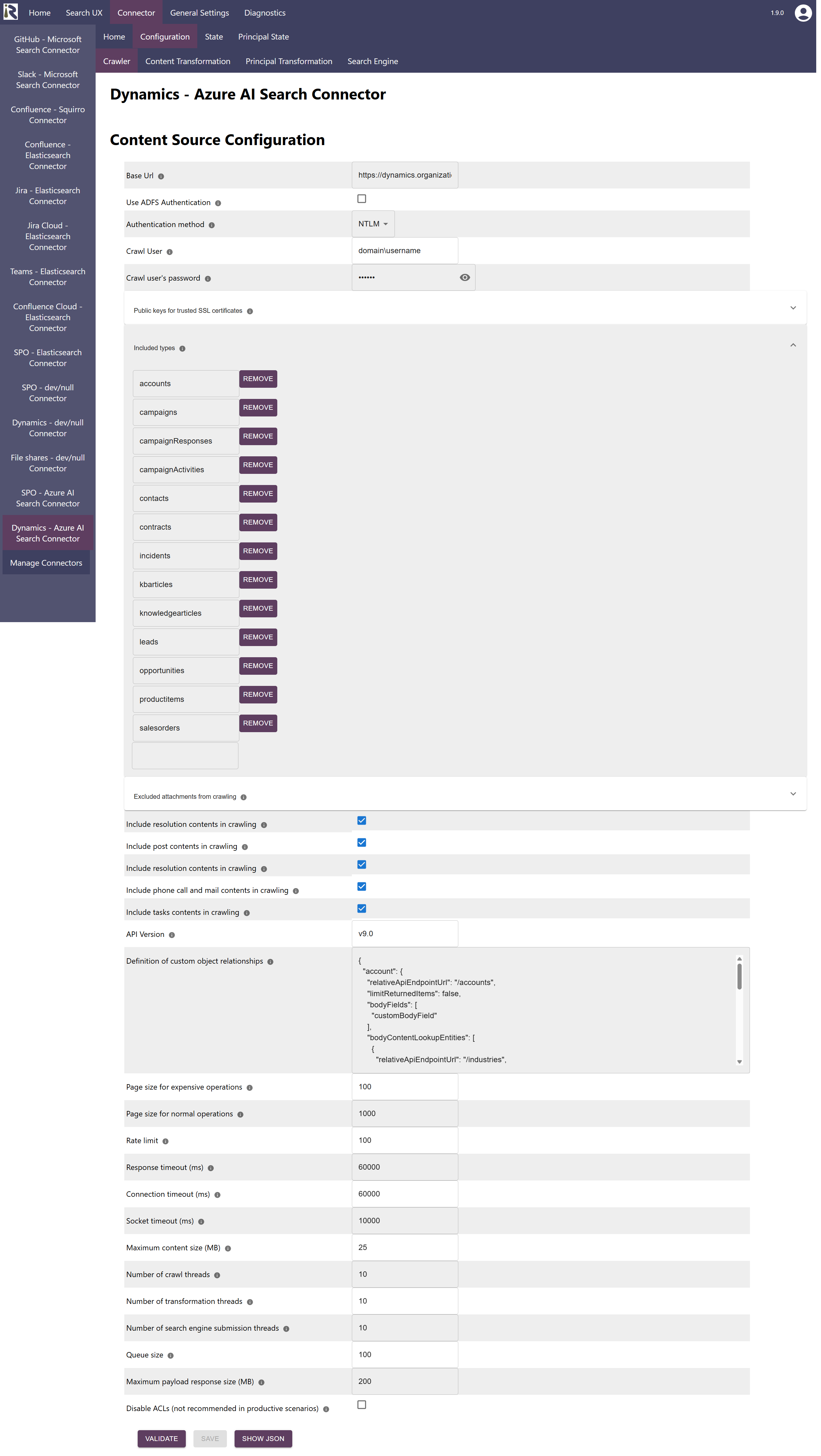

Content Source Configuration

The content source configuration of the connector comprises the following mandatory configuration fields.

Base URL. This is the root url of your Dynamics instance. Please add it without trailing slash

Mode: Please choose on-premises for your local deployment.

Authentication method. Here you need to choose NTLM or Kerberos.

Crawl user. This is the login name of the crawl user. The user must have the permissions as described above and the user name must be provided as domain\samaccountname.

Crawl user’s password. This is the according password of the crawl user

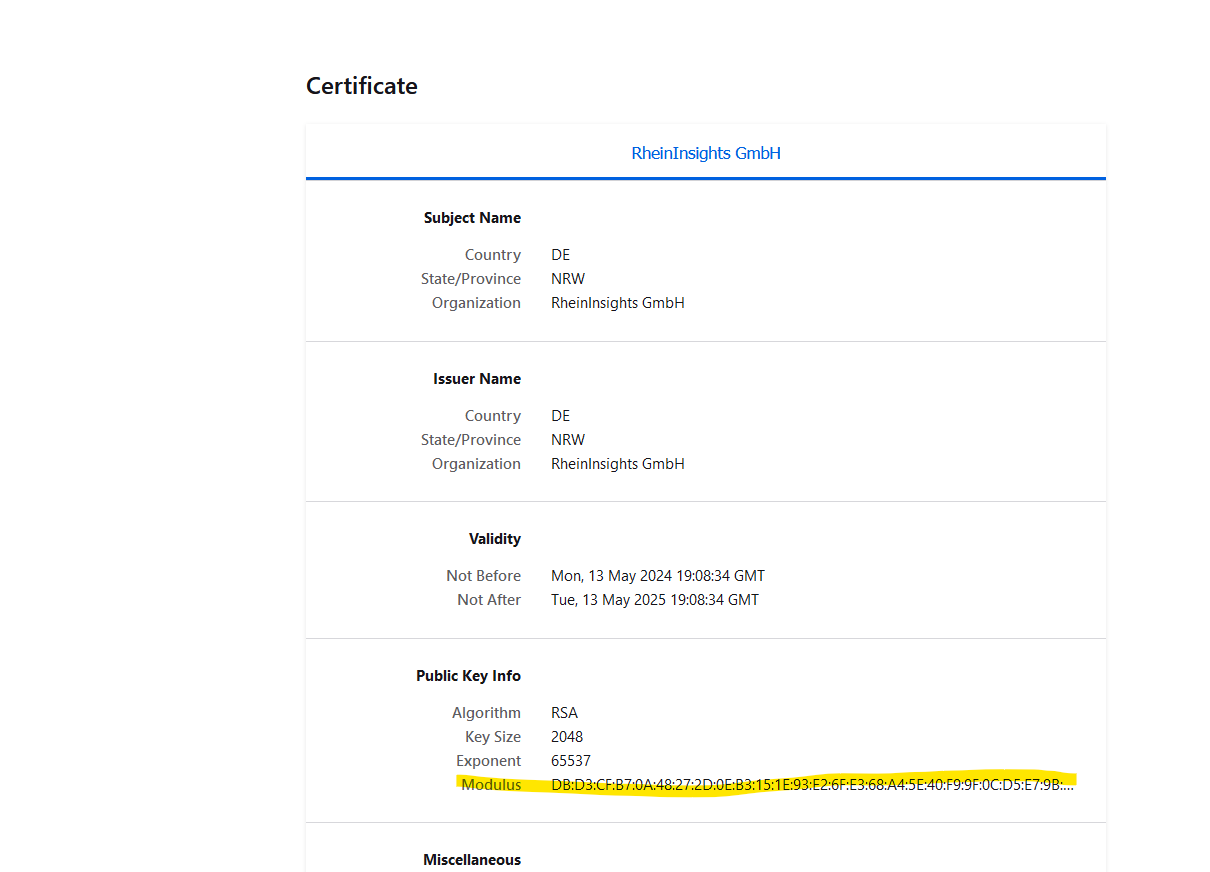

Public keys for SSL certificates: this configuration is needed, if you run the environment with self-signed certificates, or certificates which are not known to the Java key store.

We use a straight-forward approach to validate SSL certificates. In order to render a certificate valid, add the modulus of the public key into this text field. You can access this modulus by viewing the certificate within the browser.

Included types. This is a list of Dynamics Entities, which are included in a crawl. Indirectly, each entity is enriched, if applicable, with associated posts, notes, annotations or incident resolutions. The supported entity types are

accounts,incidents,contacts,contracts,kbarticles,knowledgearticles,leads,opportunities,salesorders.Excluded attachments: the file suffixes in this list will be used to determine if certain documents should not be indexed, such as images or executables.

Include post contents in crawling. If this is enabled, associated post entities are extracted and attached to the according parent entities as listed in included types.

Include resolution contents in crawling. If this is enabled, resolution notes are extracted and attached to the associated incident entities.

Include phone call and mail contents in crawling. If this is enabled, associated phone call entities are extracted and attached to the according parent entities as listed in included types.

Include tasks contents in crawling. If this is enabled, associated task entities are extracted and attached to the according parent entities as listed in included types.

Definition of custom object relationships. Here you can specify in a JSON format if you would like to augment the document bodies or document metadata by further fields. The connector can also perform a lookup against directly related objects. The format is the following

{ "accounts": { /* This is the name of an existing entity type */ "limitReturnedItems": false, /* determines if the operation should include a lower number of records */ "extendsExistingType": true, /* This value defines if an existing type (account) should be extended, if set to true it is important that account above matches an existing type. Otherwise the configuration will not be successfully validated. */ "bodyFields": [ /* here you can define entity fields which should be included in the document body. Each field can have a label (if not given, it is not shown), a fieldName (mandatory) and a fieldType (integer, double) to format the value accordingly. These fields will be added at the end of the default body. */ { "label": "Account Name", "fieldName": "name" }, { /* You can also add lookups and lookups in lookups. */ "relativeApiEndpointUrl": "/custom_industrycategory", /* is the API endpoint which should be used. */ "idFieldInForeignEntity": "industrycategoryid", /* the id filter in the $filter statement, for instance /custom_industrycategory?$filter=industrycategoryid eq value */ "idFieldInInnerEntity": "_custom_industrycategoryid_value", /* determines the id value to filter in the above statement */ "fields": [ /* provides a list of fields which then should be extracted from the matching records, same logic as in bodyFields */ { "label": "Industry", "fieldName": "name" } ], "canBeCached": true /* Determines if this lookup will be repeatedly done with the same ids from different top level records. So the connector will build up a cache which will reduce the number of API calls */ } ], "metadataFields": [ { "label": "Address 1 - Country", "fieldName": "address1_country" }, { /* You can also add lookups and lookups in lookups. */ "relativeApiEndpointUrl": "/custom_industrycategory", /* is the API endpoint which should be used. */ "idFieldInForeignEntity": "industrycategoryid", /* the id filter in the $filter statement, for instance /custom_industrycategory?$filter=industrycategoryid eq value */ "idFieldInInnerEntity": "_custom_industrycategoryid_value", /* determines the id value to filter in the above statement */ "fields": [ /* provides a list of fields which then should be extracted from the matching records, same logic as in metadataFields */ { "label": "Industry", "fieldName": "ap_name" } ], "canBeCached": true /* Determines if this lookup will be repeatedly done with the same ids from different top level records. So the connector will build up a cache which will reduce the number of API calls */ } ] }, "customentity": { "limitReturnedItems": false, /* determines if the operation should include a lower number of records */ "extendsExistingType": false, /* This is a new content type, you need to specify more information below. */ "relativeApiEndpointUrl": "/customentity", /* is the API endpoint which should be used. */ "modifiedOnField": "modifiedon", /* Defines the modifiedon date field. Used for filtering */ "idField": "customentity_id", /* Defines the idField of this entity */ "applicationPermissionRole":"prvReadCustomEntityRole",/* Permission role for this entity type for security trimming */ "titleField": "name", /* Defines the title field*/ "hasAttachments": false, /* If this type has annotations, set it to true */ "parentTitle": "Custom Entity", /* Defines the parentTitle metadata field */ "parentType": "customentity", /* Defines the parentType metadata field */ "entityType": "customentity", /* Defines the entity type, which is used in the search result click URL */ "itemType": "Custom Entity in Human Readable Form", /* Defines the itemType metadata and should be human readable */ "bodyFields": [ /* here you can define entity fields which should be included in the document body. Each field can have a label (if not given, it is not shown), a fieldName (mandatory) and a fieldType (integer, double) to format the value accordingly */ { "fieldName": "fieldName1" }, { "label": "Label", "fieldName": "fieldName2" }, { "relativeApiEndpointUrl": "/lookup", "idFieldInForeignEntity": "lookupid", "idFieldInInnerEntity": "_llokupid_value", "fields": [ { "label": "Another Label", "fieldName": "name" } ], "canBeCached": true } ], "metadataFields": [ /* here you can define entity fields which should be included in as metadat. Each field must have a label (the field name in the search schema), a fieldName (mandatory, a fieldName of the entity) and an optional fieldType (integer, double) to format the value accordingly */ { "label": "Metadata Label", "fieldName": "fieldName3", "fieldType": "integer" /* example for a field which must be interpreted as an integer */ }, { "label": "Metadata Label", "fieldName": "fieldName4", "fieldType": "double" /* example for a field which must be interpreted as an double. Doubles are formatted with two decimals */ }, { "relativeApiEndpointUrl": "/lookup", /* is the API endpoint which should be used. */ "idFieldInForeignEntity": "lookupid", "idFieldInInnerEntity": "_lookupid_value", "fields": [ { "label": Metadata Label", "fieldName": "fieldName" } ], "canBeCached": true /* Determines if this lookup will be repeatedly done with the same ids from different top level records. So the connector will build up a cache which will reduce the number of API calls */ } ] } }API Version. Please add the API version here which should be used by the connector. The connector then connects against <baseUrl>/api/data/<api version>/…

Index active entities only. Defines whether only (top level) entities with statuscode=1 should be indexed.

Maximum age of entities in days. Defines the oldest records (top level entities) to be indexed. This value is calculated based on the modifiedon fields. Records which move out of this window are deleted in the next full scan or recrawl.

Index active entities only. Defines if the connector should filter the main entities (as given above) by statuscode=1 or not.

Maximum age of entities in days. Defines the maximum age for indexing of the main records (as given above). If you do not want to filter out old records, set this value to 0.

If a value > 0 is given, only records which have modifiedon > crawl start date - given days will be indexed. Older records will be deleted during the next full scan or recrawl.Rate limit. This determines how many HTTP requests per second will be issued against Dynamics 365.

Response timeout (ms). Defines how long the connector until an API call is aborted and the operation be marked as failed.

Connection timeout (ms). Defines how long the connector waits for a connection for an API call.

Socket timeout (ms). Defines how long the connector waits for receiving all data from an API call.

The general settings are described at General Crawl Settings and you can leave these with its default values.

After entering the configuration parameters, click on validate. This validates the content crawl configuration directly against the content source. If there are issues when connecting, the validator will indicate these on the page. Otherwise, you can save the configuration and continue with Content Transformation configuration.

Recommended Crawl Schedules

Content Crawls

The connector supports incremental crawls. These are based on sorting as part of the Dynamics APIs, which is very limited and will generally only detect new entities but often not changed entities. Deletions are not detected in this mode at all. Incremental crawls should run every 15 minutes.

Due to the limitations of the incremental crawls, we recommend to run a Full Scan Crawl every few hours or daily.

For more information see Crawl Scheduling .

Principal Crawls

Depending on your requirements, we recommend to run a Full Principal Scan every day or less often.

For more information see Crawl Scheduling .