Vectorizer and Embeddings

Embeddings are one of the key ingredients for vector search and Retrieval Augmented Generation (see Retrieval Augmented Generation). The Vectorizer stage uses an embedding provided by a large language model to generate a vector representation of the given document.

Specifically, two vectors are generated: one for the title field and one for the document contents.

The resulting vectors are later indexed along with the “normal” document metadata and contents. That is, the vectors are added to the document as additional fields before indexing.
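To illustrate the idea of vectors as additional fields, here is a minimal Python sketch. It is not the Suite's implementation: the field names `title_vector` and `content_vector`, the function `add_vector_fields`, and the stand-in `toy_embed` function are assumptions made for this example only. A real setup would call the configured embedding model instead.

```python
from typing import Any, Callable, Dict, List

Vector = List[float]


def add_vector_fields(
    doc: Dict[str, Any],
    embed: Callable[[str], Vector],
    title_field: str = "title",
    content_field: str = "content",
) -> Dict[str, Any]:
    """Return a copy of doc with one extra vector field per embedded text field."""
    enriched = dict(doc)  # leave the original document untouched
    # Hypothetical naming scheme "<field>_vector"; the Suite may name fields differently.
    enriched[title_field + "_vector"] = embed(str(doc.get(title_field, "")))
    enriched[content_field + "_vector"] = embed(str(doc.get(content_field, "")))
    return enriched


def toy_embed(text: str) -> Vector:
    """Stand-in for a real embedding model; the numbers carry no meaning."""
    return [float(len(text)), float(sum(map(ord, text)) % 97)]


doc = {"id": "42", "title": "Release notes", "content": "Bug fixes and improvements."}
indexed_doc = add_vector_fields(doc, toy_embed)
print(sorted(indexed_doc))  # the original fields plus the two vector fields
```

The document keeps its metadata and contents; the two vectors simply travel with it to the index.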

Configuration Parameters

Type: here you can configure the type of your language model provider. The Suite supports Open Llama as a local model as well as Azure OpenAI GPT. Support for further models is planned.

Open Llama configuration

Embedding model: here you can provide the name of the embedding model you want to use, for example mxbai-embed-large.

Use authentication: if enabled, the Suite uses basic authentication when communicating with the embedding endpoint. Please provide the corresponding username and password.

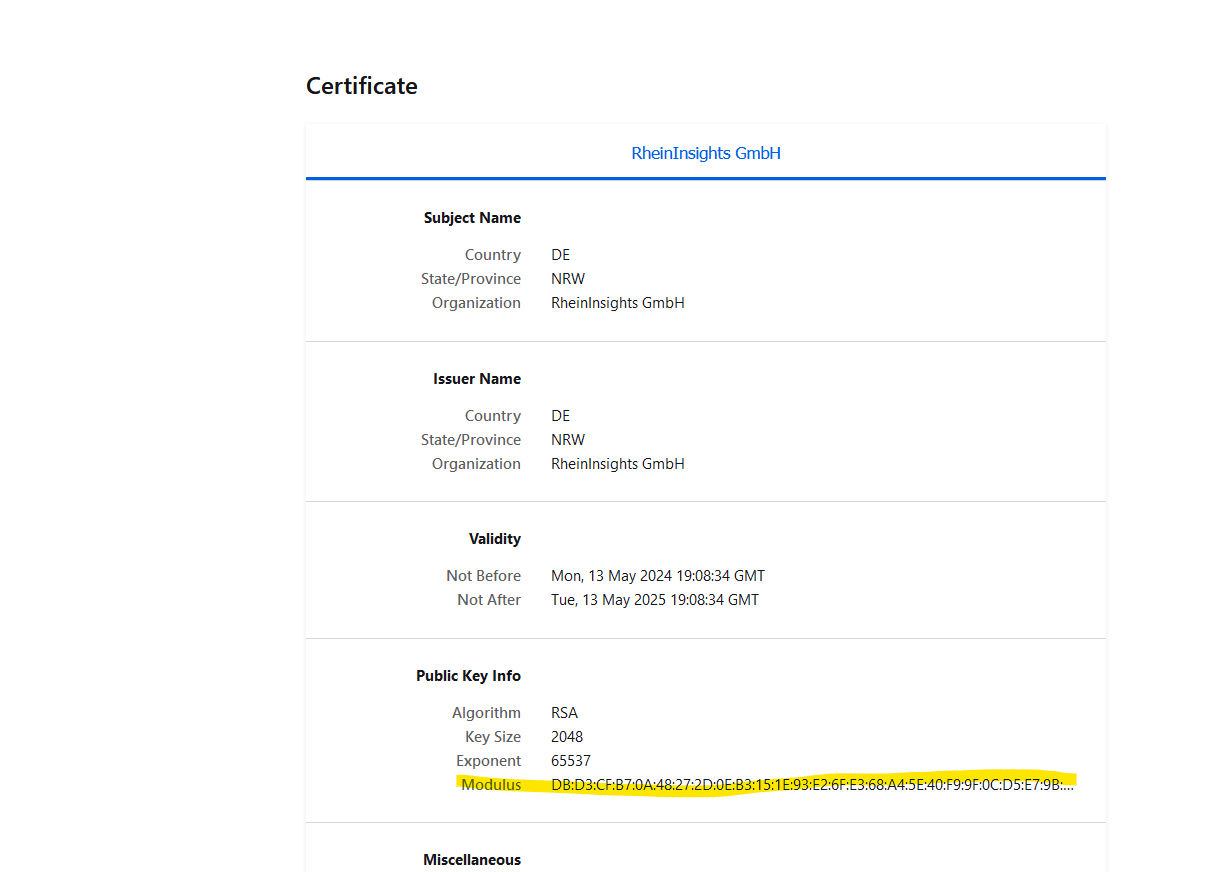
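For readers who want to check their credentials outside the Suite, the following Python sketch shows what basic authentication means on the wire: the username and password are joined with a colon, Base64-encoded, and sent in the `Authorization` header. The function name, the endpoint URL, and the JSON payload shape are assumptions for illustration; consult your embedding endpoint's documentation for the actual request format.

```python
import base64
import json
import urllib.request
from typing import Optional


def build_embedding_request(
    url: str,
    model: str,
    text: str,
    username: Optional[str] = None,
    password: Optional[str] = None,
) -> urllib.request.Request:
    """Build (but do not send) a POST request to an embedding endpoint."""
    # Hypothetical payload shape; real endpoints may expect other keys.
    body = json.dumps({"model": model, "input": text}).encode("utf-8")
    req = urllib.request.Request(url, data=body, method="POST")
    req.add_header("Content-Type", "application/json")
    if username is not None and password is not None:
        # HTTP basic authentication: "Basic " + base64("username:password")
        token = base64.b64encode(f"{username}:{password}".encode("utf-8"))
        req.add_header("Authorization", "Basic " + token.decode("ascii"))
    return req
```

The request is only constructed here; sending it with `urllib.request.urlopen(req)` requires a reachable endpoint. Note that basic authentication only encodes the credentials, so it should be combined with HTTPS.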

Public keys for SSL certificates: this configuration is needed if you run the environment with self-signed certificates or with certificates that are not known to the Java key store.

We use a straightforward approach to validate SSL certificates. To have a certificate accepted as valid, add the modulus of its public key to this text field. You can find the modulus by viewing the certificate in the browser.

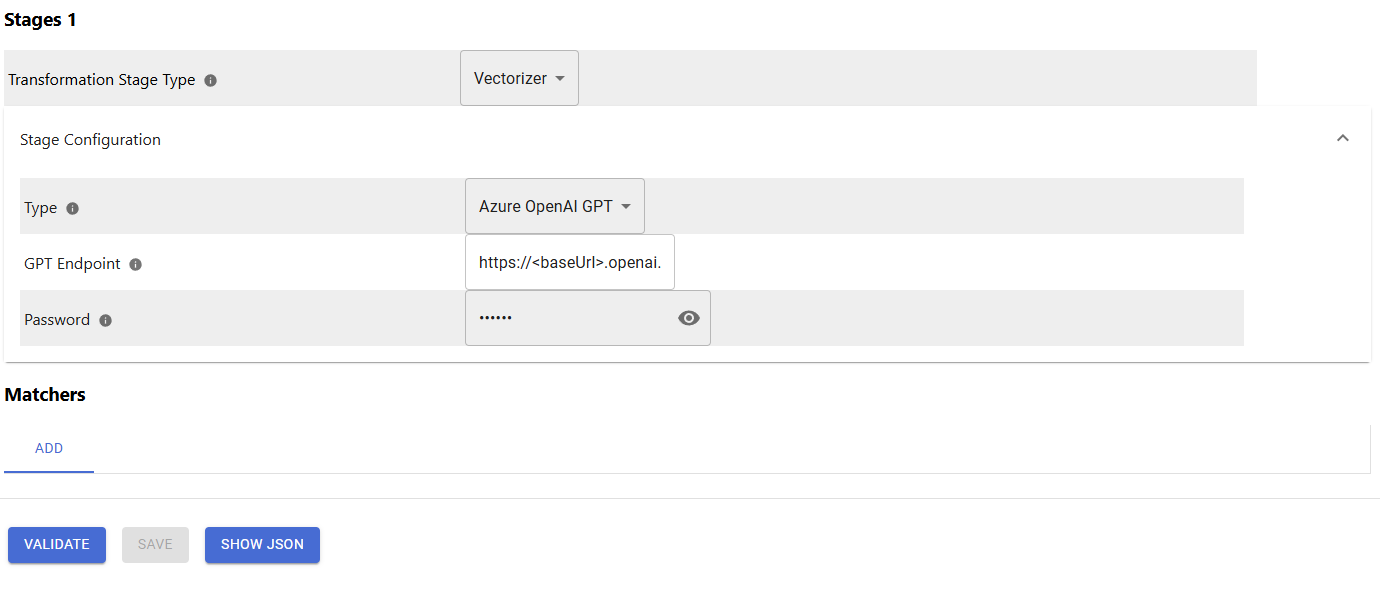

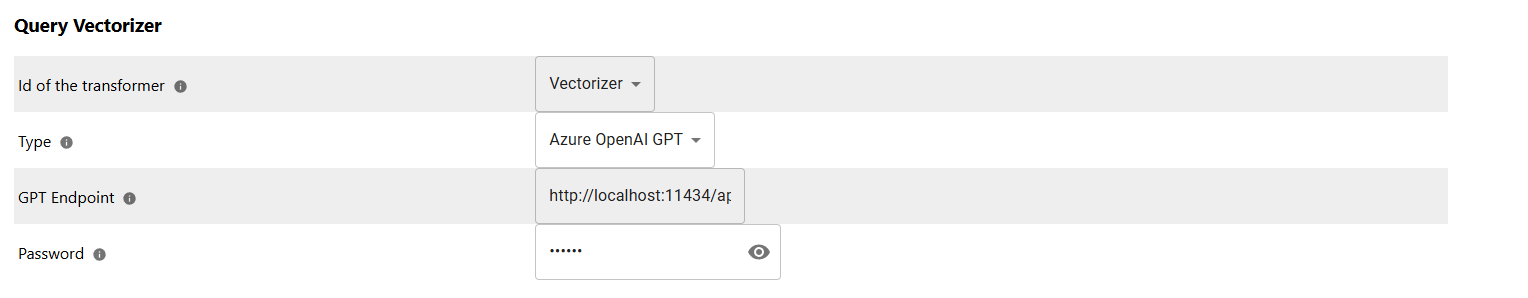
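Depending on the browser, the modulus is displayed as hexadecimal text, often with colons or spaces between bytes and sometimes with a leading `00` byte. The Python sketch below shows one way such text could be normalized and compared against the modulus of a server key. It is an assumption about how a comparison might work, not a description of the Suite's internal check; `normalize_modulus` and `is_trusted` are hypothetical helper names.

```python
import re
from typing import Iterable


def normalize_modulus(text: str) -> str:
    """Reduce a modulus copied from a certificate viewer to plain lowercase hex."""
    # Drop whitespace and colon separators, then any leading zero digits.
    compact = re.sub(r"[\s:]", "", text).lower().lstrip("0")
    if not re.fullmatch(r"[0-9a-f]+", compact):
        raise ValueError("modulus must consist of hexadecimal digits")
    return compact


def is_trusted(server_modulus: int, configured: Iterable[str]) -> bool:
    """Check whether the server key's modulus matches any configured entry."""
    server_hex = format(server_modulus, "x")  # lowercase hex, no leading zeros
    return any(normalize_modulus(entry) == server_hex for entry in configured)
```

For example, `is_trusted(0xC2D30F, ["00:C2:D3:0F"])` compares the integer modulus against an entry in colon-separated notation. A real RSA modulus is far longer (typically 2048 bits or more).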

Azure OpenAI GPT configuration

GPT Endpoint: provide the endpoint, such as https://<baseUrl>.openai.azure.com/openai/deployments/<deploymentName>/chat/embeddings?api-version=<version>

Password: enter your API key here. You can configure the key in the OpenAI configuration in the Azure portal.
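To verify an endpoint and API key independently of the Suite, a request along the following lines can be built in Python. Azure OpenAI accepts the key in an `api-key` header and, for embeddings, a JSON body with an `input` field. The function name is hypothetical, and how the Suite itself issues the request is not specified here, so treat this as a sketch only.

```python
import json
import urllib.request


def build_azure_embedding_request(
    endpoint: str, api_key: str, text: str
) -> urllib.request.Request:
    """Build (but do not send) an embeddings request for an Azure OpenAI endpoint."""
    body = json.dumps({"input": text}).encode("utf-8")
    # The endpoint is used exactly as entered in the GPT Endpoint field.
    req = urllib.request.Request(endpoint, data=body, method="POST")
    req.add_header("Content-Type", "application/json")
    req.add_header("api-key", api_key)  # the key entered as Password
    return req
```

Sending the request with `urllib.request.urlopen(req)` should return a JSON response containing the embedding if the endpoint, deployment name, API version, and key are all valid.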