Generate Embedding

To use vector search or hybrid vector search, you need to generate a vector representation of your query. This is done with a large language model embedding.

At query time, the embedding automatically transforms the input query into a vector representation, which is then sent to the vector fields of the search engine. To see which fields are configured as vector fields, check the respective search engine configuration dialogs (cf. the documentation at Search Engines).

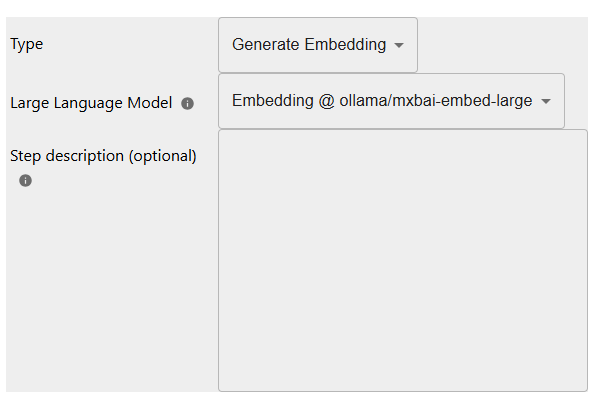
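The following Python sketch illustrates the idea of query vectorization only conceptually. It is not the product's implementation: `toy_embed` is a hypothetical stand-in for a real large language model embedding (it merely hashes tokens into a small fixed-size vector), and `vector_search`, `vector_field`, and the sample documents are invented names for illustration. It assumes that vector fields are compared with cosine similarity, which is a common choice but not confirmed by this documentation.

```python
import hashlib
import math

DIM = 16  # toy dimensionality (assumption); real embedding models use far more


def toy_embed(text):
    """Hypothetical stand-in for an LLM embedding: hash each token into a bucket."""
    vec = [0.0] * DIM
    for token in text.lower().split():
        digest = hashlib.sha256(token.encode("utf-8")).digest()
        vec[digest[0] % DIM] += 1.0
    # Normalize to unit length so that the dot product equals cosine similarity.
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec] if norm else vec


def cosine(a, b):
    """Dot product of two unit-length vectors, i.e. their cosine similarity."""
    return sum(x * y for x, y in zip(a, b))


def vector_search(query, vector_field):
    """Vectorize the query, then score it against every stored document vector."""
    query_vector = toy_embed(query)
    scored = [(cosine(query_vector, vec), doc_id) for doc_id, vec in vector_field.items()]
    return sorted(scored, reverse=True)  # highest similarity first


# Index time: documents are embedded once and stored in a vector field.
documents = {
    "doc-1": "how to reset your password",
    "doc-2": "quarterly sales report",
    "doc-3": "reset password",
}
vector_field = {doc_id: toy_embed(text) for doc_id, text in documents.items()}

# Query time: the query is embedded with the same model and compared to the field.
results = vector_search("reset password", vector_field)
for score, doc_id in results:
    print(doc_id, round(score, 3))
```

The important point is that the query and the indexed content have to be embedded with the same model. Otherwise the vectors live in different spaces and their similarity scores are meaningless.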

To have queries vectorized at query time, configure a query vectorizer as a query processing stage. This query stage has the following options.

Configuration

Large language model: choose one of the large language model embeddings defined in Managing Large Language Models.