Documentation

Managing Large Language Models

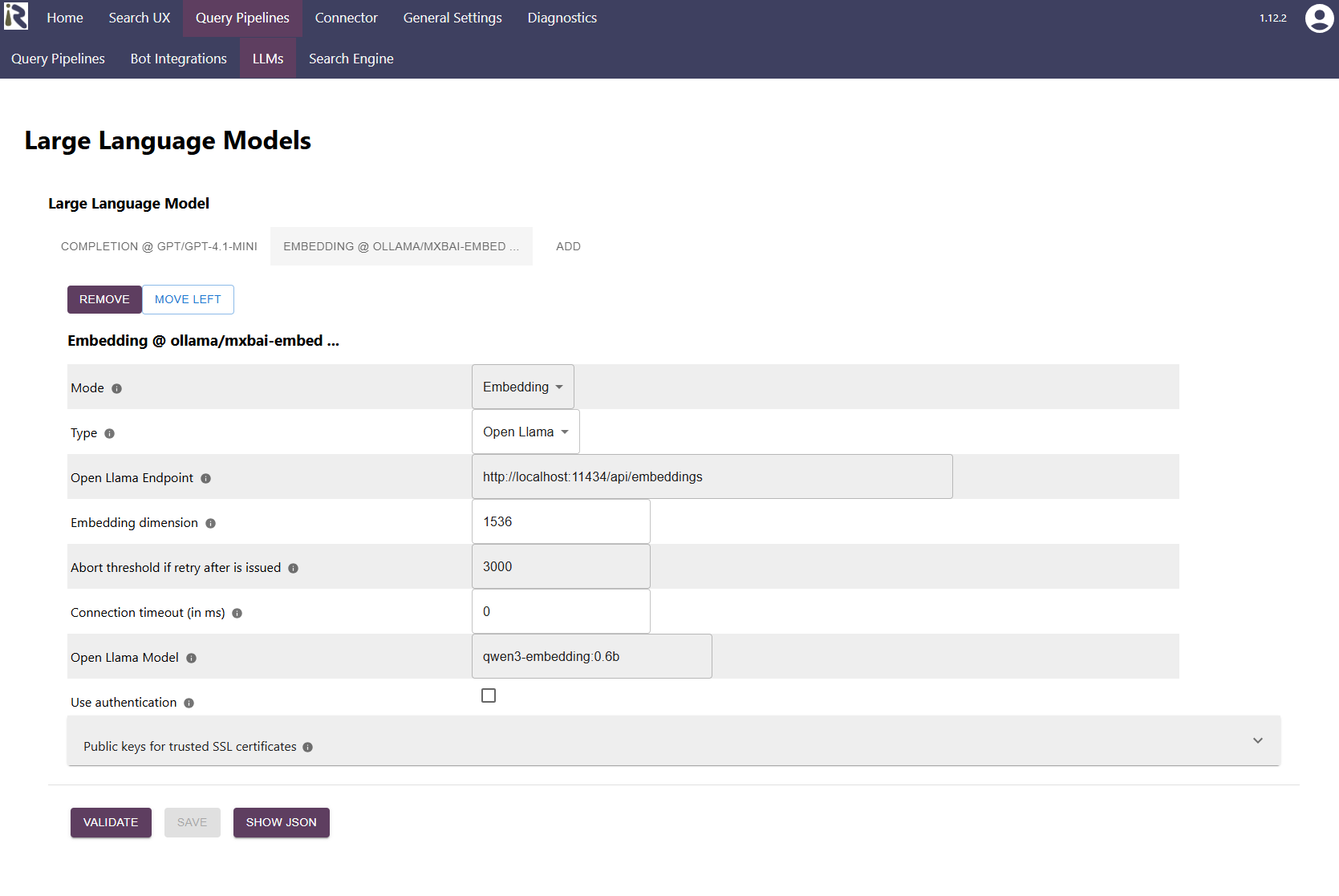

Large language models are used in several places in the RheinInsights Retrieval Suite, in particular for completions and embeddings. For query pipelines, you can find the configuration at

RheinInsights Admin UI > Query Pipelines > LLMs

In this dialog, you can add or remove large language models. Currently, the Suite supports local deployments with Ollama and deployments at Azure OpenAI.

Configuration

The configuration parameters are:

Mode: Choose embedding to generate vector representations of inputs, or completion to generate text responses.

Type: Open Llama (for Ollama deployments) or Azure OpenAI

Azure OpenAI Endpoint Settings

GPT Endpoint: The URL of the respective endpoint, for instance https://<yourdeployment>.openai.azure.com/openai/deployments/gpt-4.1-mini/embeddings?api-version=2025-01-01-preview for embeddings and https://<yourdeployment>.openai.azure.com/openai/deployments/gpt-4.1-mini/chat/completions?api-version=2025-01-01-preview for completions.

Embedding Dimension: The target vector length. Vector representations are filled or truncated to this length so that they match the schema.

Abort threshold: Tells the client to abort an operation if it takes too long, for instance if you have exceeded the quota.

Connection timeout in ms: Tells the client how long to wait for a connection to the endpoint to be opened.

Password: Your API key.
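As a rough illustration of how these settings fit together, the following Python sketch builds a request for an Azure OpenAI embeddings endpoint and adjusts a vector to the configured dimension. It is not the Suite's implementation. The function names `build_azure_embedding_request` and `fit_dimension` are hypothetical, and padding with zeros is an assumption, since the documentation only states that vectors are filled or truncated. The request shape follows the public Azure OpenAI REST API, which expects the key in an `api-key` header.

```python
import json
import urllib.request


def build_azure_embedding_request(endpoint: str, api_key: str, text: str) -> urllib.request.Request:
    """Build (but do not send) a POST request for an Azure OpenAI embeddings endpoint.

    `endpoint` corresponds to the "GPT Endpoint" setting and `api_key` to the
    "Password" setting. Both names are illustrative, not taken from the Suite.
    """
    body = json.dumps({"input": text}).encode("utf-8")
    return urllib.request.Request(
        endpoint,
        data=body,
        headers={"Content-Type": "application/json", "api-key": api_key},
        method="POST",
    )


def fit_dimension(vector: list[float], dimension: int, fill: float = 0.0) -> list[float]:
    """Truncate or pad `vector` so that it has exactly `dimension` entries.

    Padding with `fill` (zero by default) is an assumption of this sketch.
    """
    if len(vector) >= dimension:
        return list(vector[:dimension])
    return list(vector) + [fill] * (dimension - len(vector))
```

To send the request, one could pass it to `urllib.request.urlopen(request, timeout=seconds)`. That timeout is given in seconds, so a value configured in milliseconds would be divided by 1000.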

Open Llama Endpoint Settings

Open Llama Endpoint: The URL of the respective endpoint, for instance http://localhost:11434/api/embeddings for embeddings and http://localhost:11434/api/chat for completions.

Embedding Dimension: The target vector length. Vector representations are filled or truncated to this length so that they match the schema.

Abort threshold: Tells the client to abort an operation if it takes too long, for instance if you have exceeded the quota.

Connection timeout in ms: Tells the client how long to wait for a connection to the endpoint to be opened.

Open llama model: The model to use. It must have been pulled before it can be used.

Use authentication: Enables basic authentication with user name and password.

Public key for trusted SSL certificates: Allows you to add the public key of a self-signed certificate if the endpoint runs on HTTPS with such a certificate.
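To make the Ollama settings more concrete, here is a minimal Python sketch of how a client might assemble a request from them. It is an illustration under stated assumptions, not the Suite's code. The function name `build_ollama_request` and the model name in the usage note are hypothetical. The payload shapes follow the public Ollama REST API (`prompt` for `/api/embeddings`, `messages` for `/api/chat`). Basic authentication is assumed to be handled by whatever sits in front of the endpoint.

```python
import base64
import json
import urllib.request
from typing import Optional


def build_ollama_request(
    endpoint: str,
    model: str,
    text: str,
    mode: str = "embedding",
    username: Optional[str] = None,
    password: Optional[str] = None,
) -> urllib.request.Request:
    """Build (but do not send) a POST request for an Ollama endpoint.

    `mode` mirrors the "Mode" setting: "embedding" or "completion".
    """
    if mode == "embedding":
        payload = {"model": model, "prompt": text}
    elif mode == "completion":
        payload = {
            "model": model,
            "messages": [{"role": "user", "content": text}],
            "stream": False,  # ask for a single JSON response instead of a stream
        }
    else:
        raise ValueError(f"unknown mode: {mode!r}")

    headers = {"Content-Type": "application/json"}
    # Corresponds to "Use authentication": add a basic auth header if credentials are set.
    if username is not None and password is not None:
        token = base64.b64encode(f"{username}:{password}".encode("utf-8")).decode("ascii")
        headers["Authorization"] = f"Basic {token}"

    return urllib.request.Request(
        endpoint,
        data=json.dumps(payload).encode("utf-8"),
        headers=headers,
        method="POST",
    )
```

A call such as `build_ollama_request("http://localhost:11434/api/embeddings", "nomic-embed-text", "hello")` would produce an embeddings request. The model name here is only an example and must match a model that has already been pulled. For an HTTPS endpoint with a self-signed certificate, one option is to pass a context created with `ssl.create_default_context(cafile=...)` to `urllib.request.urlopen`.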